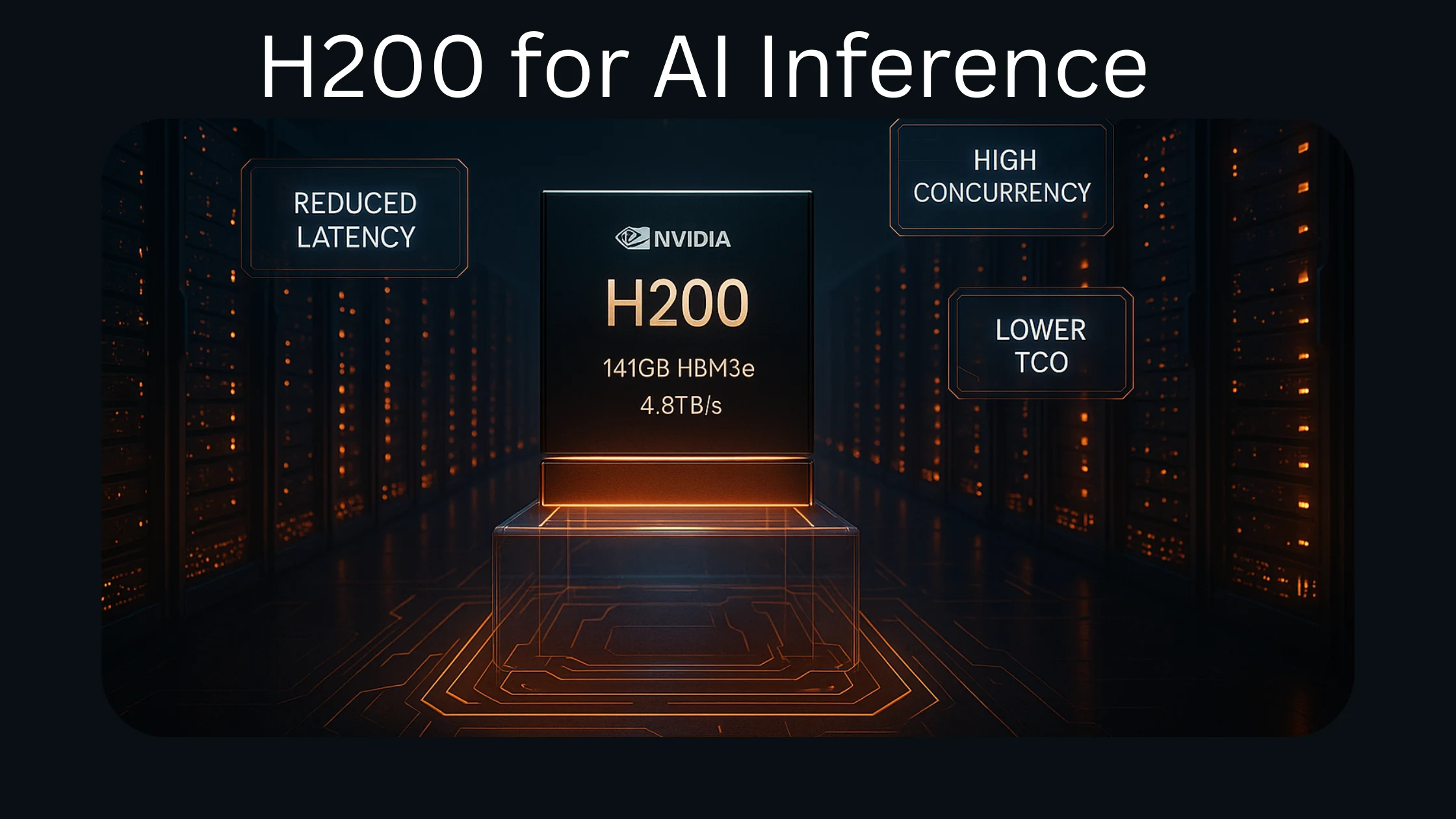

H200 for AI Inference: Why System Administrators Should Bet on the H200

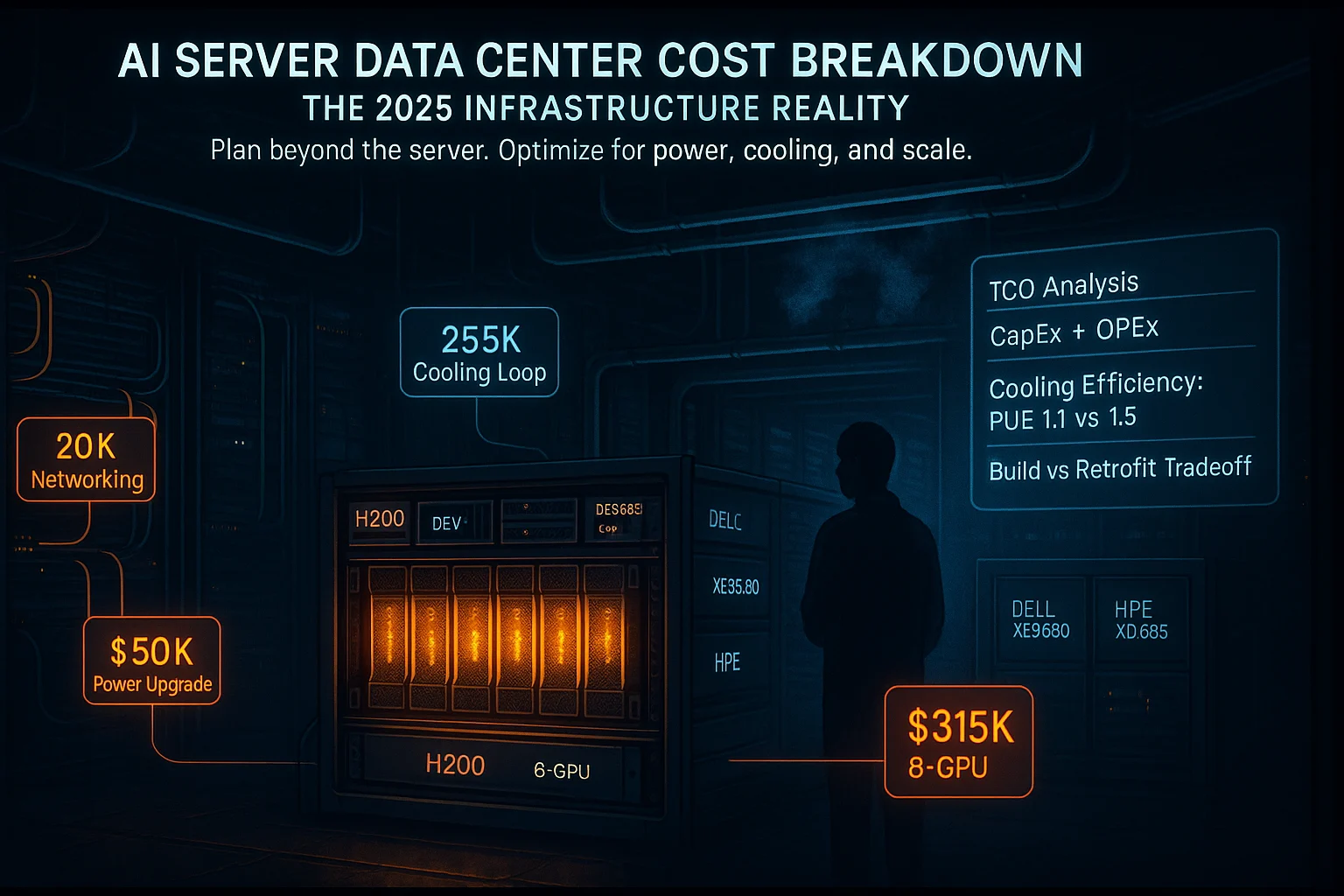

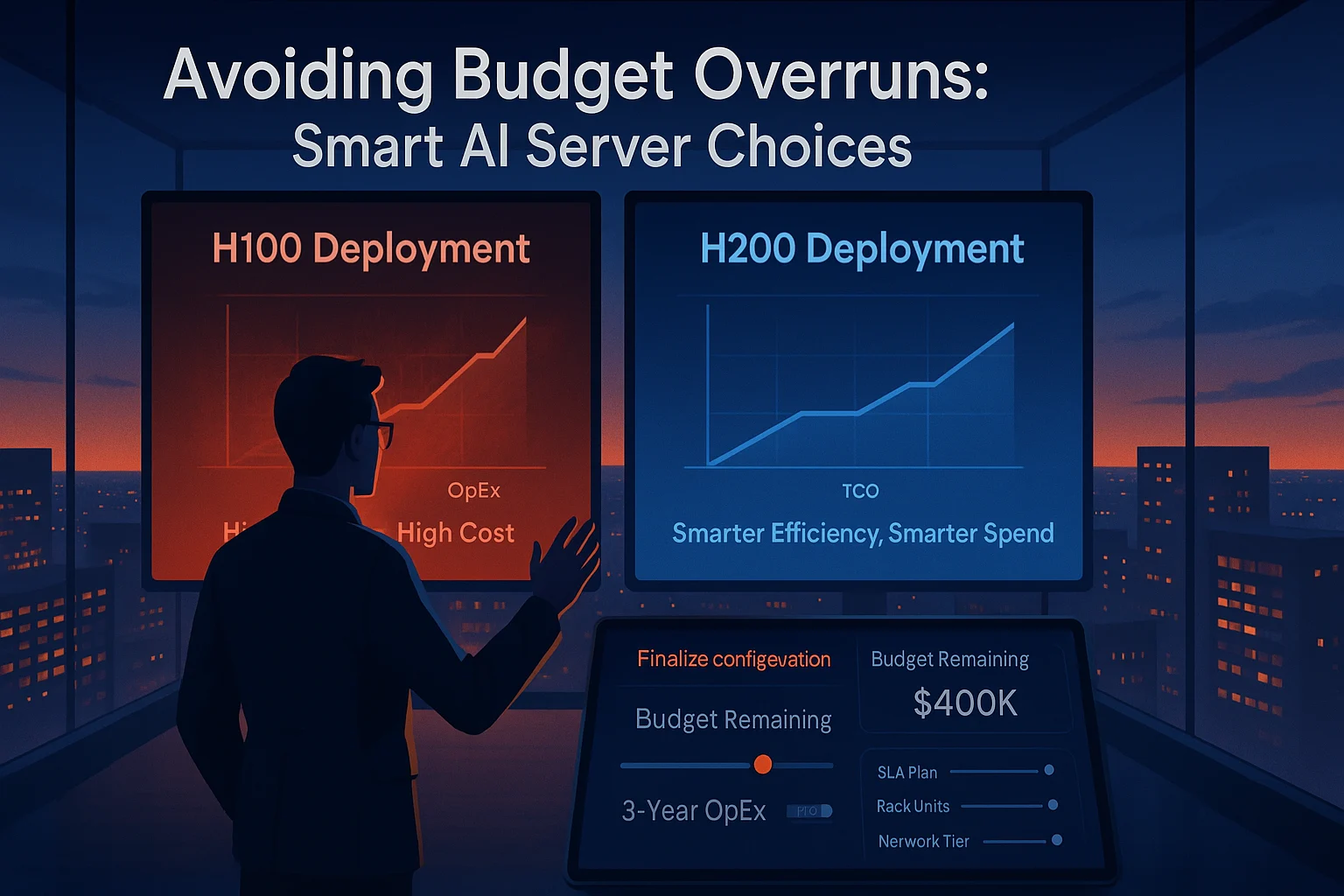

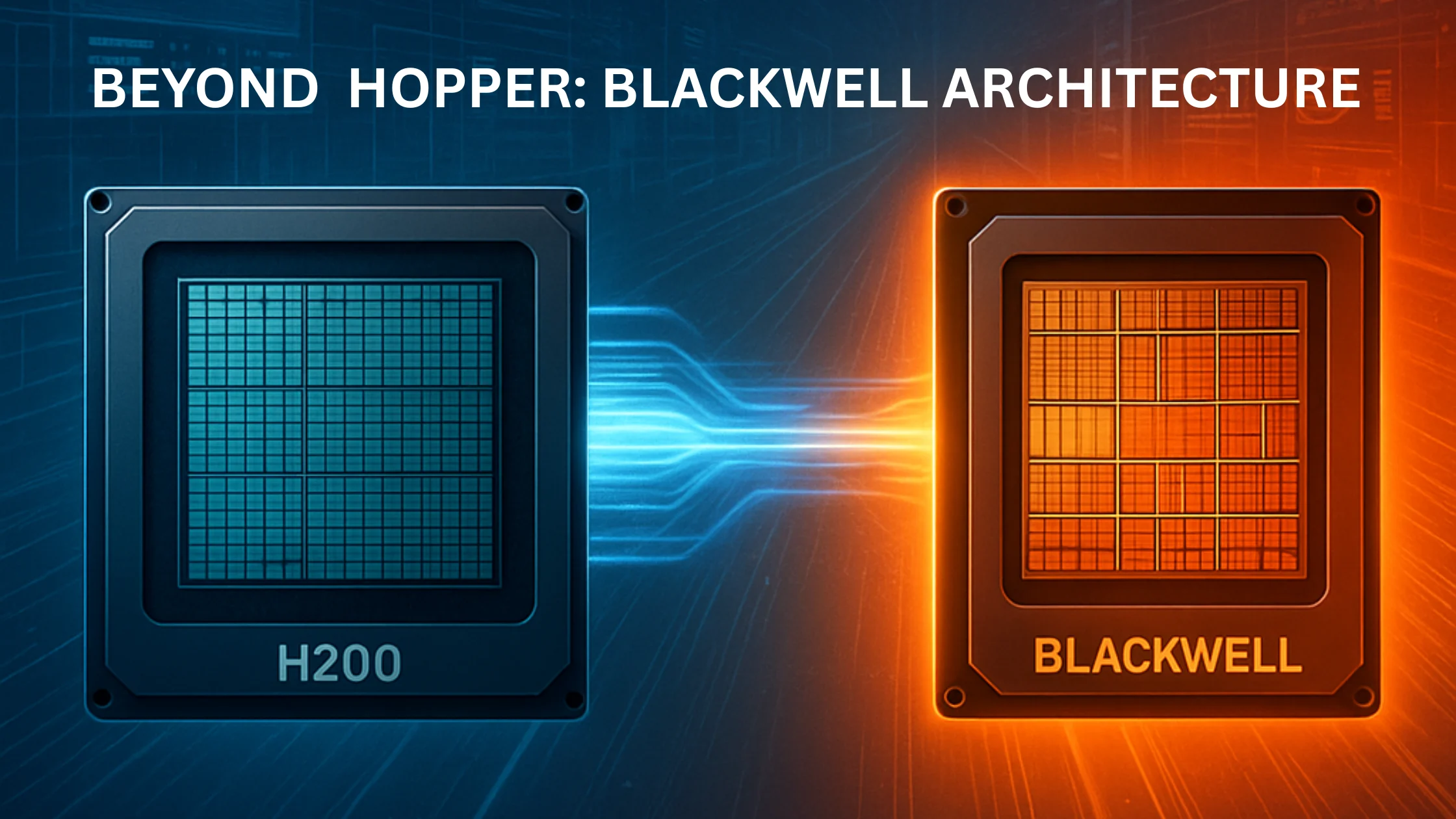

As AI services scale, system administrators run into memory bottlenecks, concurrency limits, and rising infrastructure costs. NVIDIA's H200 GPU targets these pain points with 141GB of HBM3e memory and 4.8TB/s of memory bandwidth, which leaves room for larger batches and lowers latency in high-concurrency AI inference. GPUs with less memory force workarounds such as model partitioning or microbatching. The H200 can hold a large language model like Llama 70B on a single card when the weights are quantized (at 16 bits, 70 billion parameters already take about 140GB, leaving almost nothing for the KV cache), and NVIDIA reports up to roughly double the H100's inference throughput on models of that size. That translates to fewer servers, lower power consumption, and simpler deployments, all without rewriting code or overhauling cooling systems. System administrators get better performance per watt, easier infrastructure management, and a lower total cost of ownership. Whether you're running LLM APIs, real-time analytics, or multi-modal AI services, the H200 is a strategic edge: it is built to turn memory capacity and bandwidth into operational efficiency.
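To make the memory and bandwidth argument concrete, here is a rough back-of-the-envelope sketch in Python. It is an estimate under stated assumptions, not a benchmark: the model shape (80 layers, 8 KV heads, head dimension 128, as published for Llama 2 70B), the H100 figures (80GB, 3.35TB/s), and the 10% memory reserve are assumptions that do not come from this article, and all function names are hypothetical.

```python
GB = 1e9  # decimal gigabytes, the unit GPU memory is marketed in


def weight_bytes(params_billion: float, bytes_per_param: float) -> float:
    """Memory taken by the model weights alone."""
    return params_billion * 1e9 * bytes_per_param


def kv_cache_bytes_per_token(layers: int, kv_heads: int, head_dim: int,
                             bytes_per_value: int) -> int:
    """KV cache per token: one K and one V vector per layer per KV head."""
    return 2 * layers * kv_heads * head_dim * bytes_per_value


def max_cached_tokens(gpu_gb: float, params_billion: float,
                      bytes_per_param: float, layers: int, kv_heads: int,
                      head_dim: int, kv_bytes: int,
                      reserve_fraction: float = 0.10) -> int:
    """Tokens of KV cache that fit after weights and a safety reserve.

    reserve_fraction is a hypothetical allowance for activations,
    runtime buffers, and fragmentation.
    """
    usable = gpu_gb * GB * (1.0 - reserve_fraction)
    free = usable - weight_bytes(params_billion, bytes_per_param)
    if free <= 0:
        return 0  # the weights alone do not fit on this card
    per_token = kv_cache_bytes_per_token(layers, kv_heads, head_dim, kv_bytes)
    return int(free // per_token)


def decode_tokens_per_sec_bound(bandwidth_tb_s: float, params_billion: float,
                                bytes_per_param: float) -> float:
    """Crude single-sequence decode ceiling: each generated token reads
    every weight once, so speed <= bandwidth / weight size."""
    return bandwidth_tb_s * 1e12 / weight_bytes(params_billion, bytes_per_param)


if __name__ == "__main__":
    # Assumed Llama 2 70B shape; FP8 weights (1 byte), FP16 KV cache (2 bytes).
    shape = dict(layers=80, kv_heads=8, head_dim=128, kv_bytes=2)
    cards = {"H100 (assumed 80GB, 3.35TB/s)": (80, 3.35),
             "H200 (141GB, 4.8TB/s)": (141, 4.8)}
    for name, (mem_gb, bw) in cards.items():
        tokens = max_cached_tokens(mem_gb, 70, 1, **shape)
        bound = decode_tokens_per_sec_bound(bw, 70, 1)
        print(f"{name}: ~{tokens:,} KV-cache tokens, "
              f"<= {bound:.0f} tokens/s per sequence at batch 1")
```

The KV-cache token budget is what caps how many concurrent requests (and how much context per request) a single card can serve, so the extra memory matters more for concurrency than the raw capacity figures suggest. The bandwidth ceiling ignores KV-cache reads and kernel overheads, so real throughput will be lower on both cards.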
