NVIDIA DGX BasePOD™: Accelerating Enterprise AI with Scalable Infrastructure

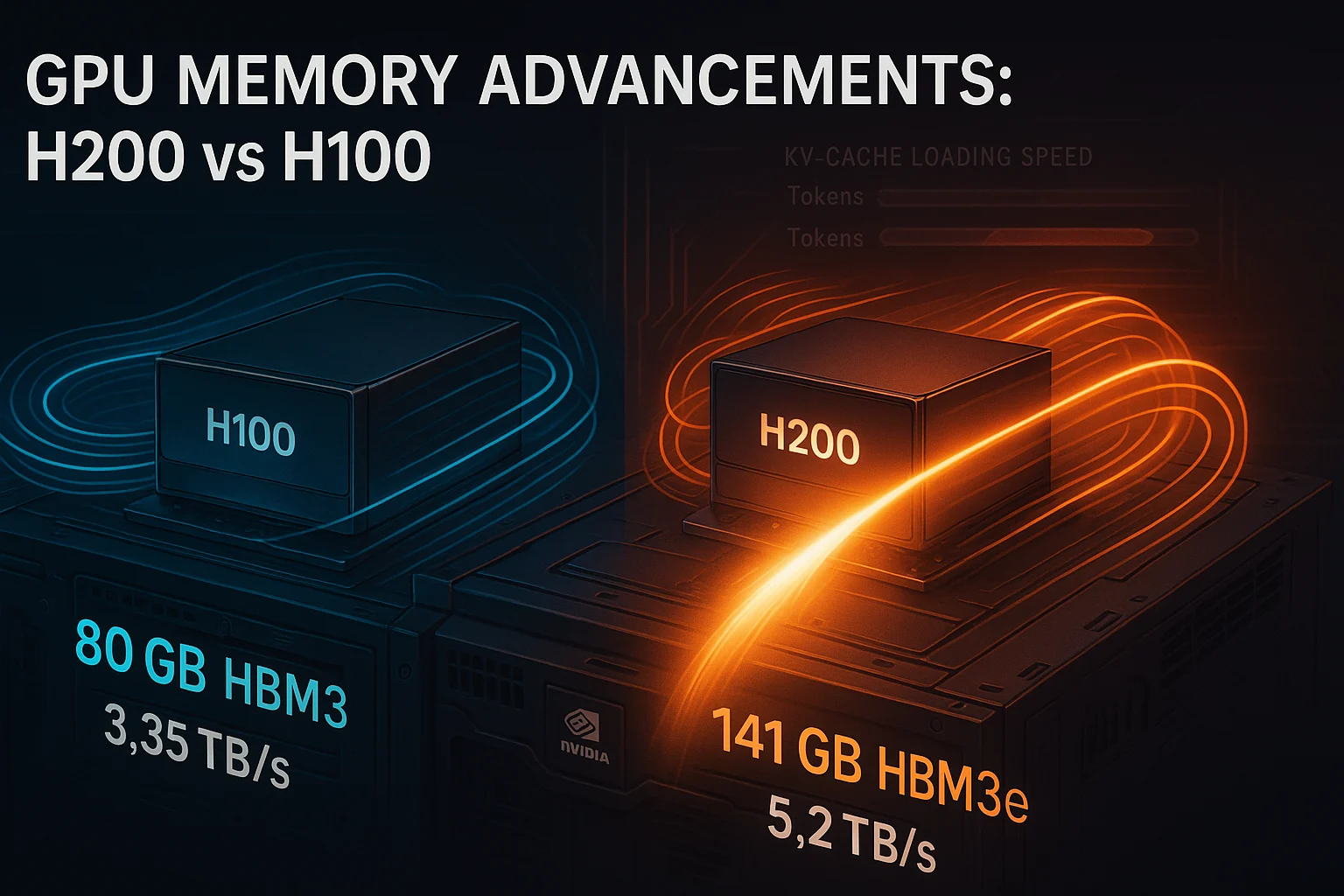

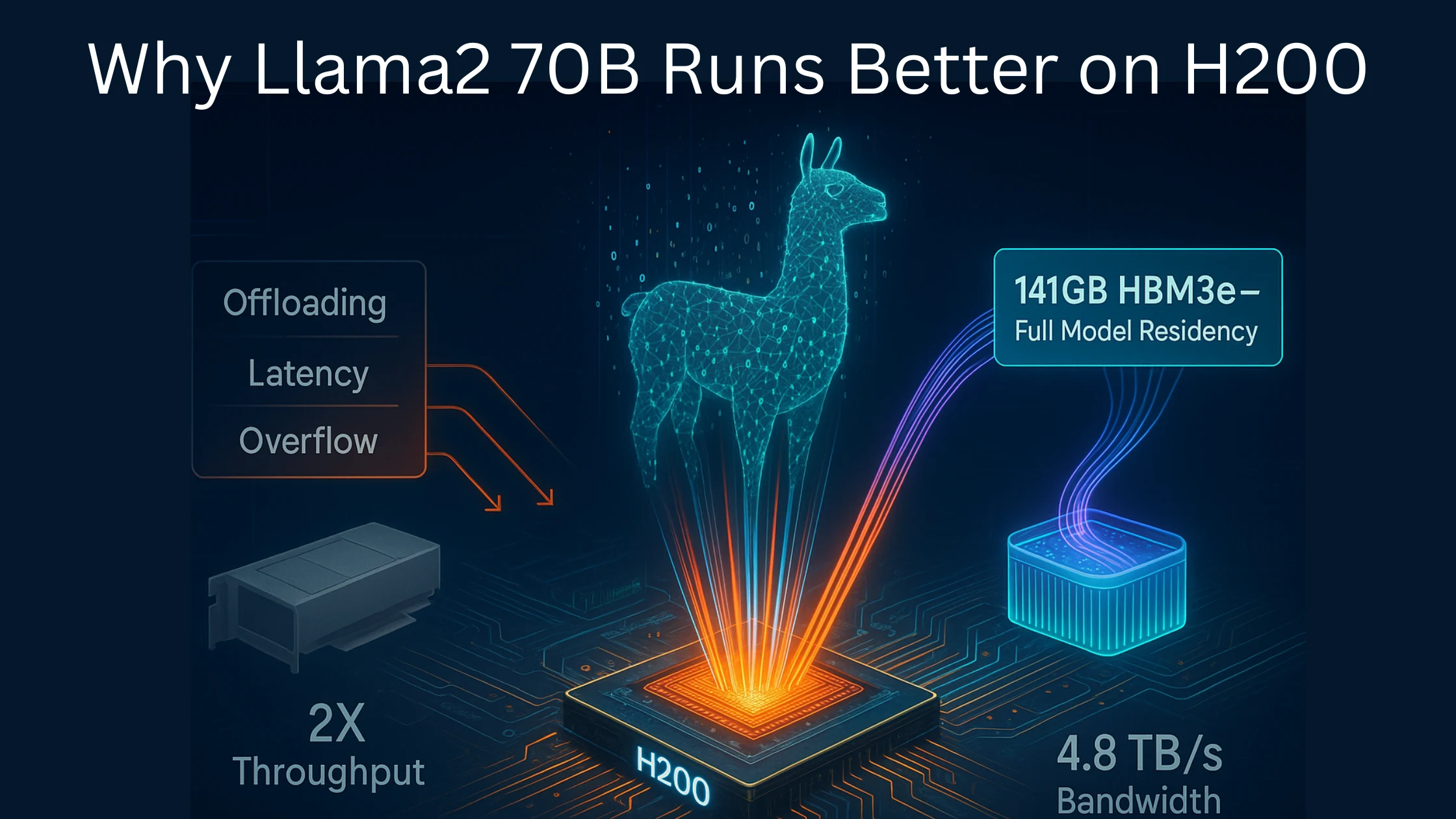

The NVIDIA DGX BasePOD™ is a pre-tested, ready-to-deploy blueprint for enterprise AI infrastructure, designed to solve the complexity and time-consuming challenges of building AI solutions. It integrates cutting-edge components like the NVIDIA H200 GPU and optimises compute, networking, storage, and software layers for seamless performance. This unified, scalable system drastically reduces setup time from months to weeks, eliminates compatibility risks, and maximises resource usage. The BasePOD™ supports demanding AI workloads like large language models and generative AI, enabling enterprises to deploy AI faster and scale efficiently from a few to thousands of GPUs.

11 minute read

•Energy and Utilities