Writing About AI

Uvation

Reen Singh is an engineer and a technologist with a diverse background spanning software, hardware, aerospace, defense, and cybersecurity. As CTO at Uvation, he leverages his extensive experience to lead the company’s technological innovation and development.

The DGX H100 system has historically served as the performance benchmark for AI training and inference, powered by the Hopper architecture. In contrast, the newly released DGX B200 system is built on NVIDIA’s Blackwell architecture, which marks a major advancement in infrastructure design. The DGX B200 is purpose-built with enhanced GPU-to-GPU interconnects, increased memory capacity, and higher computational throughput specifically to handle trillion-parameter models and real-time inference workloads.

The DGX B200 utilizes eight Blackwell B200 Tensor Core GPUs, moving beyond the DGX H100’s eight Hopper H100 Tensor Core GPUs. Blackwell GPUs are built on the refined TSMC 4NP process and feature an expanded 192GB of HBM3e memory per GPU, compared to Hopper’s 80GB HBM3 memory. Furthermore, Blackwell introduces a dual-die design, enabling the architecture to achieve approximately twice the throughput of Hopper for FP8 and FP16 workloads. This new generation also uses NVLink 5.0 connectivity, which doubles the GPU-to-GPU bandwidth to 1.8TB/s, compared to the H100’s NVLink 4.0 at 900GB/s.
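To make the memory and interconnect figures above concrete, here is a minimal Python sketch that totals HBM per system and checks whether the weights of a trillion-parameter model would fit at FP8 (one byte per parameter). The `DgxSystem` class and the helper names are illustrative, not part of any NVIDIA tooling, and the estimate counts weights only, ignoring activations, KV cache, and optimizer state.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DgxSystem:
    """Per-system figures as quoted in this article (illustrative container)."""
    name: str
    gpus: int
    hbm_gb_per_gpu: int   # per-GPU memory capacity, in GB
    nvlink_gb_s: int      # per-GPU NVLink bandwidth, in GB/s

    @property
    def total_hbm_gb(self) -> int:
        # Aggregate GPU memory across the whole system.
        return self.gpus * self.hbm_gb_per_gpu


DGX_H100 = DgxSystem("DGX H100", gpus=8, hbm_gb_per_gpu=80, nvlink_gb_s=900)
DGX_B200 = DgxSystem("DGX B200", gpus=8, hbm_gb_per_gpu=192, nvlink_gb_s=1800)


def weights_gb(params: float, bytes_per_param: int) -> float:
    """Memory needed for model weights alone, in GB (10^9 bytes)."""
    return params * bytes_per_param / 1e9


def weights_fit(system: DgxSystem, params: float, bytes_per_param: int) -> bool:
    """True if the weights alone fit in the system's aggregate HBM."""
    return weights_gb(params, bytes_per_param) <= system.total_hbm_gb


if __name__ == "__main__":
    for system in (DGX_H100, DGX_B200):
        fits = weights_fit(system, params=1e12, bytes_per_param=1)
        print(f"{system.name}: {system.total_hbm_gb} GB total HBM, "
              f"1T-parameter FP8 weights fit: {fits}")
```

Under these assumptions, a single DGX B200 has room for the FP8 weights of a trillion-parameter model, while a single DGX H100 would need to shard them across more than one system.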

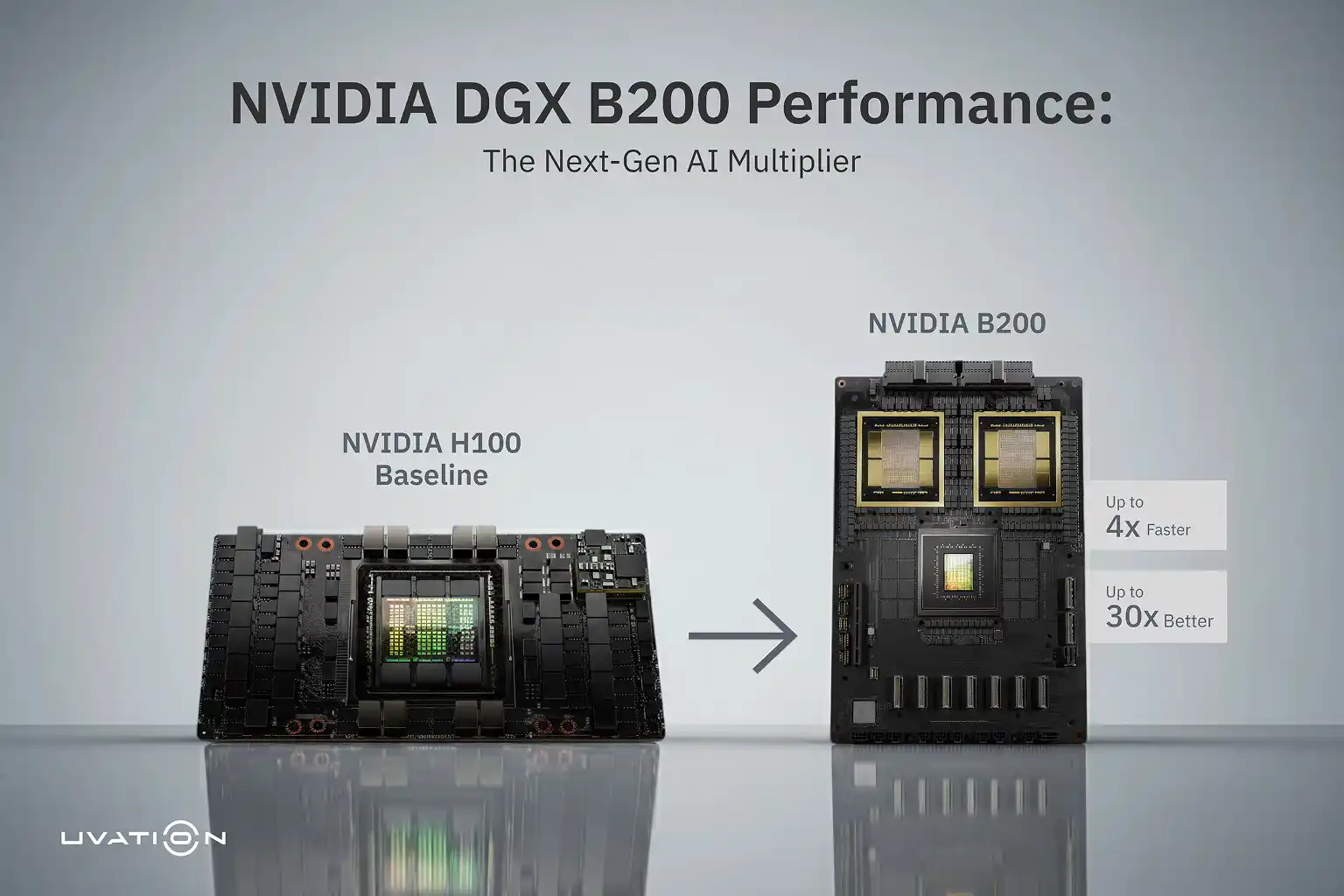

The DGX B200 demonstrates up to three times faster training performance than the DGX H100 when running large language models (LLMs) such as GPT and Mixtral. Some benchmark summaries put the figure higher, at up to 4x the LLM training throughput of the H100 baseline; the exact multiple depends on the model and configuration measured. This speed gain results from the combination of improved FP8 and FP16 compute performance, the expanded HBM3e memory, and the faster interconnect bandwidth. The Blackwell dual-die design enables data to flow more efficiently between compute units, reducing idle cycles during training and shortening training runs.
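As a rough illustration of what a throughput multiple means for wall-clock time, the sketch below divides a baseline training duration by a speedup factor. The 720-hour baseline is a placeholder rather than a measured run, and because the quoted multiples are "up to" figures, the results are best read as lower bounds on duration.

```python
def estimated_hours(baseline_hours: float, speedup: float) -> float:
    """Estimate training time after a throughput speedup.

    Assumes the job scales perfectly with throughput, which real
    workloads rarely do; treat the result as a best case.
    """
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return baseline_hours / speedup


if __name__ == "__main__":
    baseline = 720.0  # hypothetical 30-day run on the H100 baseline
    for speedup in (3.0, 4.0):  # the "up to" multiples quoted above
        hours = estimated_hours(baseline, speedup)
        print(f"{speedup:.0f}x speedup: {hours:.0f} hours")
```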

For inference workloads, the DGX B200 delivers up to 15 times the performance of the DGX H100. These gains come from architectural refinements that help the system sustain peak performance. The use of FP8 precision minimizes memory overhead and data movement, allowing inference pipelines to run with significantly higher throughput and lower energy draw.

The DGX B200 advances scaling capabilities by implementing NVLink 5.0 and NVSwitch 5, doubling GPU-to-GPU communication bandwidth to 1.8TB/s. The system’s enhanced NVLink memory coherence is a major advancement, allowing clusters of up to 576 GPUs to share data within a unified memory space. This allows AI training frameworks to treat large GPU clusters as a single memory pool, which improves resource utilization, reduces the need for frequent data replication, and supports faster convergence for complex AI models distributed across multiple nodes.
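To give a sense of scale for that unified memory space, this short sketch multiplies out the figures quoted in this article: 576 GPUs at 192GB each, grouped into eight-GPU systems. The function names are illustrative, and the result is raw capacity only, not what a framework could actually allocate.

```python
def pooled_memory_tb(gpus: int, hbm_gb_per_gpu: int) -> float:
    """Aggregate HBM across all GPUs, in TB (1 TB = 1000 GB)."""
    return gpus * hbm_gb_per_gpu / 1000


def systems_needed(gpus: int, gpus_per_system: int) -> int:
    """Number of systems required to host the GPUs (ceiling division)."""
    return -(-gpus // gpus_per_system)


if __name__ == "__main__":
    gpus = 576            # maximum cluster size quoted in the article
    hbm_gb_per_gpu = 192  # per-GPU HBM3e capacity quoted in the article
    print(f"Pooled memory: {pooled_memory_tb(gpus, hbm_gb_per_gpu):.1f} TB")
    print(f"Eight-GPU systems: {systems_needed(gpus, 8)}")
```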

The Blackwell architecture implements features like fine-grained power gating and advanced support for FP8 precision to significantly improve energy efficiency per operation. Early data suggests Blackwell achieves about 1.7x better efficiency than Hopper when normalized to FP16 workloads, and the DGX B200 offers up to 30x better power efficiency for inference compared to the DGX H100 baseline. Because the DGX B200 delivers higher throughput and better per-watt efficiency, it reduces the electricity and cooling costs per training run or inference cycle, which lowers operational expenditure over time.
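The link between throughput, power draw, and operating cost can be sketched with simple arithmetic: energy is power multiplied by time, scaled by a facility overhead factor (PUE) to account for cooling. Every number in the example is a placeholder chosen for illustration, not a measured or vendor-published figure; substitute your own power, duration, and electricity price.

```python
def energy_kwh(power_kw: float, hours: float, pue: float = 1.0) -> float:
    """Energy consumed by a run, in kWh.

    pue is the facility's power usage effectiveness; values above 1.0
    add cooling and other overhead on top of the IT load.
    """
    return power_kw * hours * pue


def run_cost(power_kw: float, hours: float, price_per_kwh: float,
             pue: float = 1.0) -> float:
    """Electricity cost of a run, in the currency of price_per_kwh."""
    return energy_kwh(power_kw, hours, pue) * price_per_kwh


if __name__ == "__main__":
    # Hypothetical inputs: the newer system draws more power per hour
    # but finishes the same job in a third of the time.
    older = run_cost(power_kw=10.0, hours=300.0, price_per_kwh=0.10, pue=1.5)
    newer = run_cost(power_kw=14.0, hours=100.0, price_per_kwh=0.10, pue=1.5)
    print(f"Older system: {older:.2f} per run")
    print(f"Newer system: {newer:.2f} per run")
```

The point of the sketch is that a system with higher peak power can still cost less per run when it finishes the job sooner.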

The DGX H200 is considered an incremental evolution of the Hopper line, introducing features like HBM3e memory to bridge the gap between earlier Hopper systems and the newer Blackwell generation. While the H200 offers improved memory bandwidth and communication, the DGX B200 marks a true generational shift in scaling, architecture, and performance. With NVLink 5.0 and enhanced memory coherence, the B200 is better suited for the largest AI workloads, such as foundation model training and multimodal AI. Organizations building new infrastructure often find it justifiable to move directly to the DGX B200, since it is better suited to longer-term demands and its TCO benefits may outweigh those of adopting the intermediate H200 tier.
