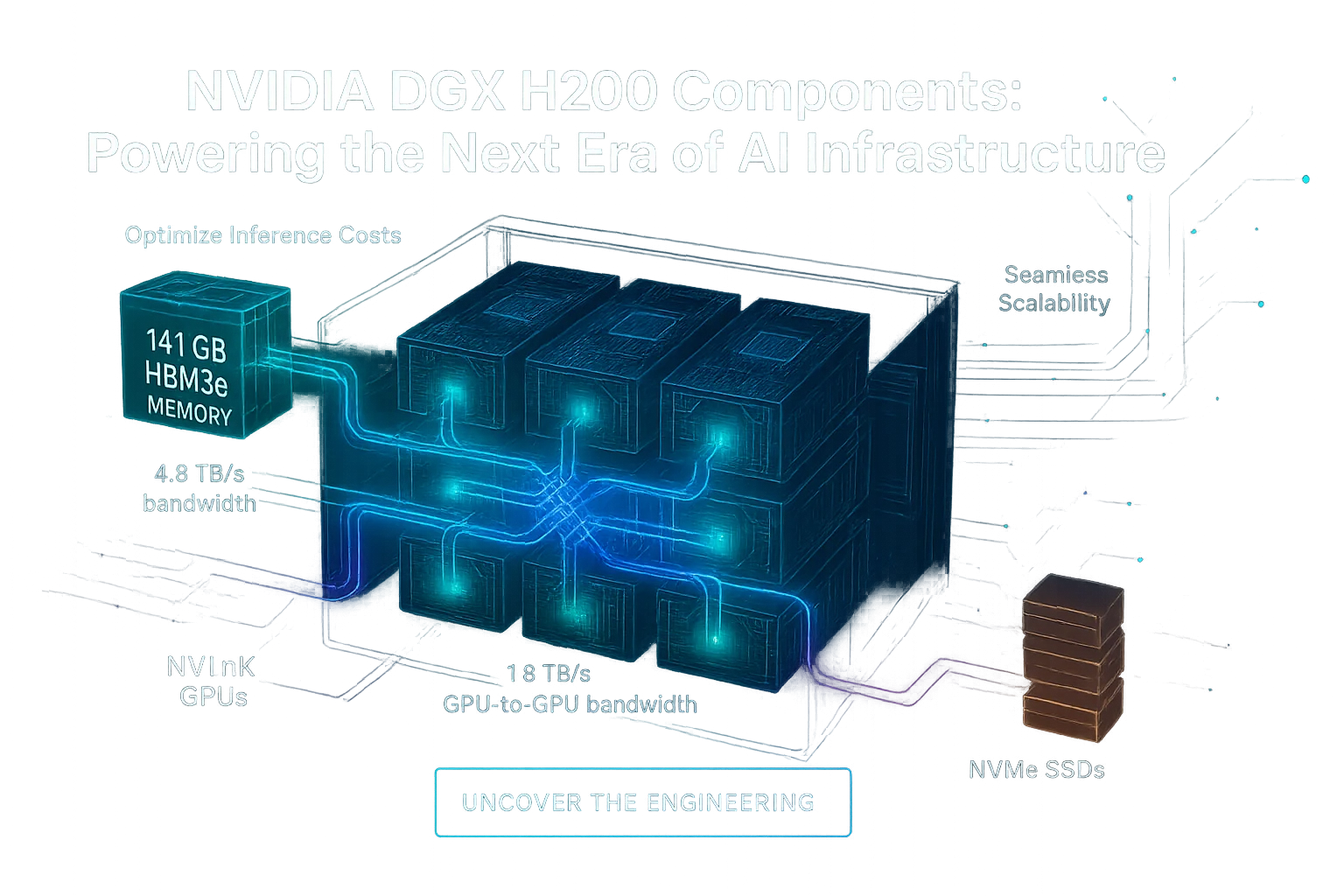

NVIDIA DGX H200 Components: Deep Dive into the Hardware Architecture

The NVIDIA DGX H200 is a system engineered for next-generation AI infrastructure, integrating GPUs, networking, memory, CPUs, storage, and power…
