Cybersecurity Risk Assessment: How Managed Services Turn Unknown Threats into Measurable Business Risk

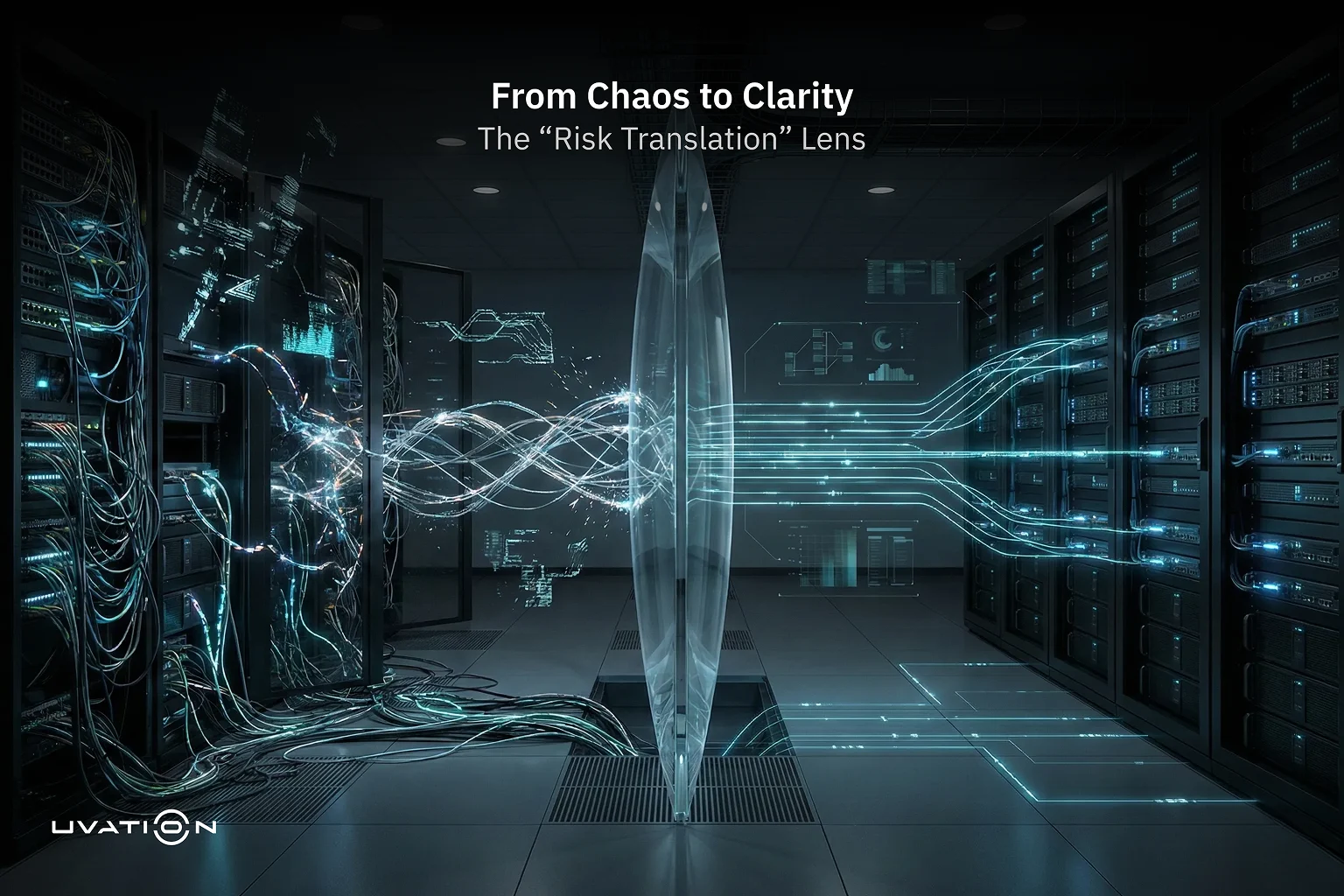

Effective cybersecurity risk assessments transform technical findings into measurable business exposure by evaluating assets, threats, impact, and likelihood. Yet because attack surfaces and environments evolve rapidly, static, point-in-time assessments often fall short. True insight requires treating risk assessment as a living process supported by managed security services. By integrating 24/7 monitoring, managed operations like Uvation’s validate theoretical risk models against real-world behavior, ensuring risk measurements remain current. This continuous visibility bridges the gap between technical flaws and business outcomes, allowing leadership to prioritize risks based on evidence rather than outdated assumptions.

12 minute read
