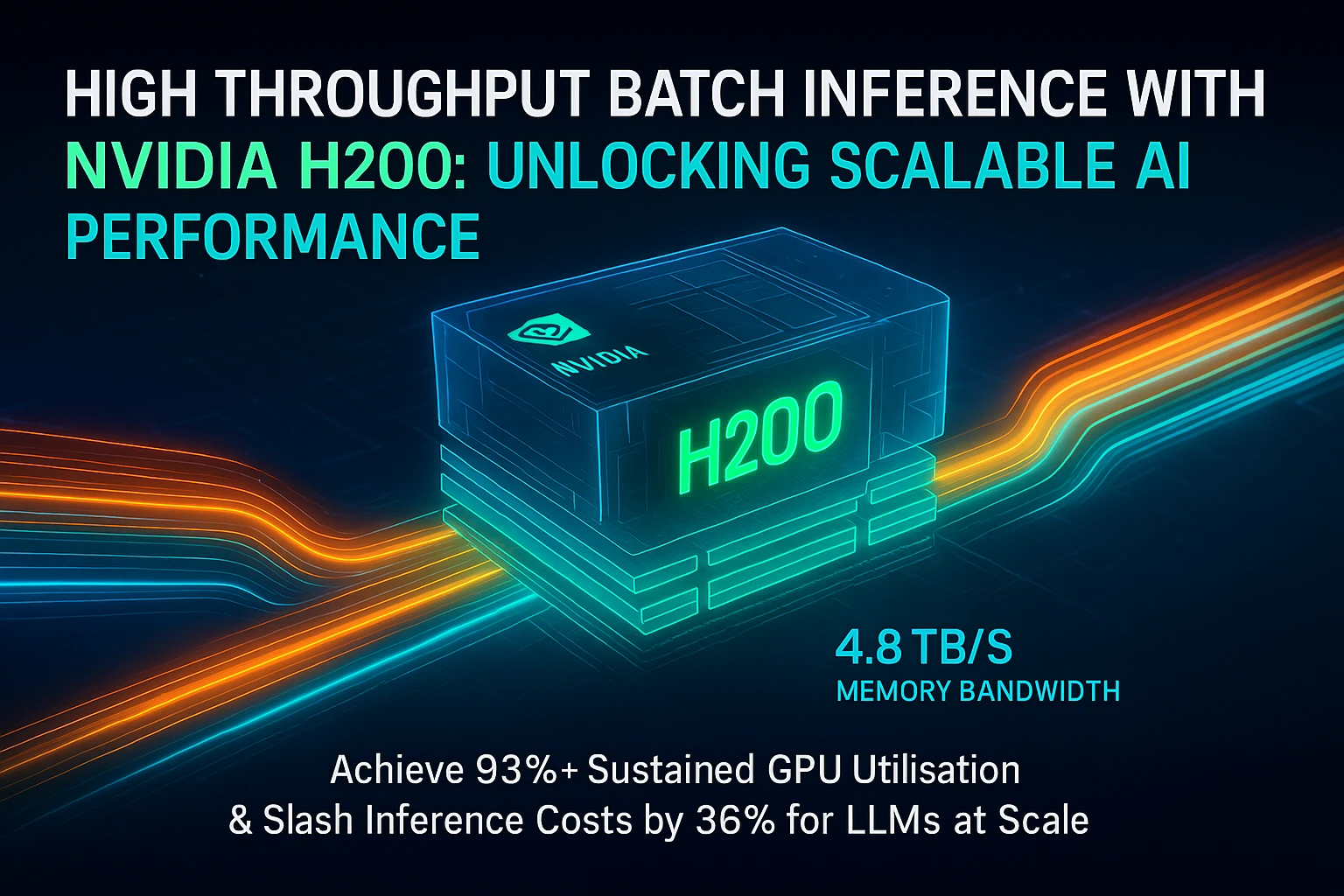

High Throughput Batch Inference with NVIDIA H200: Unlocking Scalable AI Performance

For high-throughput batch inference in scalable AI, the true bottleneck is sustained throughput (measured in tokens/sec) rather than raw FLOPs, and the NVIDIA H200 is built to address it. Its 141 GB of HBM3e memory and 4.8 TB/s of memory bandwidth, together with the FP8 Transformer Engine and the NVLink + NVSwitch interconnect, let it hold large models and serve many batched requests at once, significantly increasing tokens per second.
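The claim that memory bandwidth, not FLOPs, caps batch-inference throughput can be checked with a back-of-envelope estimate. The sketch below assumes a hypothetical 70B-parameter model and assumes each decode step reads every weight from HBM once at the full 4.8 TB/s. It also ignores KV-cache traffic and compute limits, so its numbers are upper bounds, not benchmarks. The function names are illustrative.

```python
# Rough upper bound on decode throughput for a memory-bandwidth-bound GPU.
# Assumptions (illustrative, not measured): every decode step reads all model
# weights from HBM exactly once, that read is shared by all sequences in the
# batch, and KV-cache traffic and compute limits are ignored.

HBM_BANDWIDTH_BYTES_PER_S = 4.8e12  # H200 memory bandwidth: 4.8 TB/s


def decode_step_seconds(num_params: float, bytes_per_param: float) -> float:
    """Time to stream all weights from HBM once at peak bandwidth."""
    return num_params * bytes_per_param / HBM_BANDWIDTH_BYTES_PER_S


def max_tokens_per_second(num_params: float, bytes_per_param: float, batch_size: int) -> float:
    """Each step emits one token per sequence, so batching multiplies tokens/sec."""
    return batch_size / decode_step_seconds(num_params, bytes_per_param)


if __name__ == "__main__":
    num_params = 70e9  # hypothetical 70B-parameter model
    for label, nbytes in (("FP16", 2.0), ("FP8", 1.0)):
        step_ms = decode_step_seconds(num_params, nbytes) * 1e3
        print(f"{label}: {step_ms:.1f} ms per decode step")
    for batch in (1, 8, 32):
        tps = max_tokens_per_second(num_params, 1.0, batch)
        print(f"FP8, batch={batch:>2}: <= {tps:,.0f} tokens/sec")
```

Under these assumptions a decode step takes about 29.2 ms in FP16 and 14.6 ms in FP8, so FP8 alone doubles the ceiling (and at FP16 the weights would occupy about 140 GB of the 141 GB). Batching 32 sequences lifts the FP8 bound from roughly 69 to about 2,194 tokens/sec because one weight read serves every sequence. In practice the gain flattens once the batch becomes compute-bound or the KV cache exhausts HBM, which is why capacity and bandwidth matter together.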

Achieving this requires a “bandwidth-first” architecture: memory-aware batch scheduling, an optimised network fabric (e.g., GPUDirect RDMA, InfiniBand), and orchestration with Kubernetes and NVIDIA Triton Inference Server. When correctly architected, H200 clusters deliver substantial performance and cost gains, including +33% sustained GPU utilisation, +81% tokens/sec, and 36–38% lower inference and power costs. Uvation specialises in deploying these optimised, cost-effective GPU clusters.
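Memory-aware batch scheduling can be illustrated with a minimal admission sketch. Before a request joins a batch, the scheduler checks that its key/value (KV) cache still fits in the HBM left after the model weights. The model shape (80 layers, 8 KV heads, head dimension 128, FP8 cache), the 10 GB reserve, and the names `KVCacheConfig`, `Request` and `schedule_batch` are all hypothetical. This is not Triton's scheduler or any specific server's API.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class KVCacheConfig:
    """Hypothetical model shape used to size the KV cache."""
    num_layers: int
    num_kv_heads: int
    head_dim: int
    bytes_per_elem: int  # 1 for FP8, 2 for FP16

    def bytes_per_token(self) -> int:
        # One key and one value vector per layer and KV head.
        return 2 * self.num_layers * self.num_kv_heads * self.head_dim * self.bytes_per_elem


@dataclass(frozen=True)
class Request:
    request_id: str
    prompt_tokens: int
    max_new_tokens: int


def schedule_batch(
    queue: List[Request],
    cfg: KVCacheConfig,
    hbm_bytes: int,
    weight_bytes: int,
    reserve_bytes: int,
) -> List[Request]:
    """Admit requests in FIFO order while their worst-case KV cache fits in free HBM."""
    budget = hbm_bytes - weight_bytes - reserve_bytes
    per_token = cfg.bytes_per_token()
    batch: List[Request] = []
    used = 0
    for req in queue:
        need = (req.prompt_tokens + req.max_new_tokens) * per_token
        if used + need > budget:
            break  # stop at the first request that does not fit, preserving order
        batch.append(req)
        used += need
    return batch


if __name__ == "__main__":
    cfg = KVCacheConfig(num_layers=80, num_kv_heads=8, head_dim=128, bytes_per_elem=1)
    queue = [Request(f"req-{i}", prompt_tokens=2048, max_new_tokens=2048) for i in range(128)]
    batch = schedule_batch(
        queue,
        cfg,
        hbm_bytes=141 * 10**9,     # H200 HBM3e capacity
        weight_bytes=70 * 10**9,   # hypothetical 70B model in FP8
        reserve_bytes=10 * 10**9,  # assumed headroom for activations and workspace
    )
    print(f"KV cache per token: {cfg.bytes_per_token():,} bytes")
    print(f"Admitted {len(batch)} of {len(queue)} requests")
```

With these assumptions each token costs 163,840 bytes of KV cache, so a 4,096-token request needs about 0.67 GB and 90 of the 128 queued requests fit alongside the weights. Reserving the worst case up front is deliberately conservative. Production servers often allocate KV cache in pages as tokens are generated, which admits more concurrent requests.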
