Writing About AI

Uvation

Reen Singh is an engineer and a technologist with a diverse background spanning software, hardware, aerospace, defense, and cybersecurity. As CTO at Uvation, he leverages his extensive experience to lead the company’s technological innovation and development.

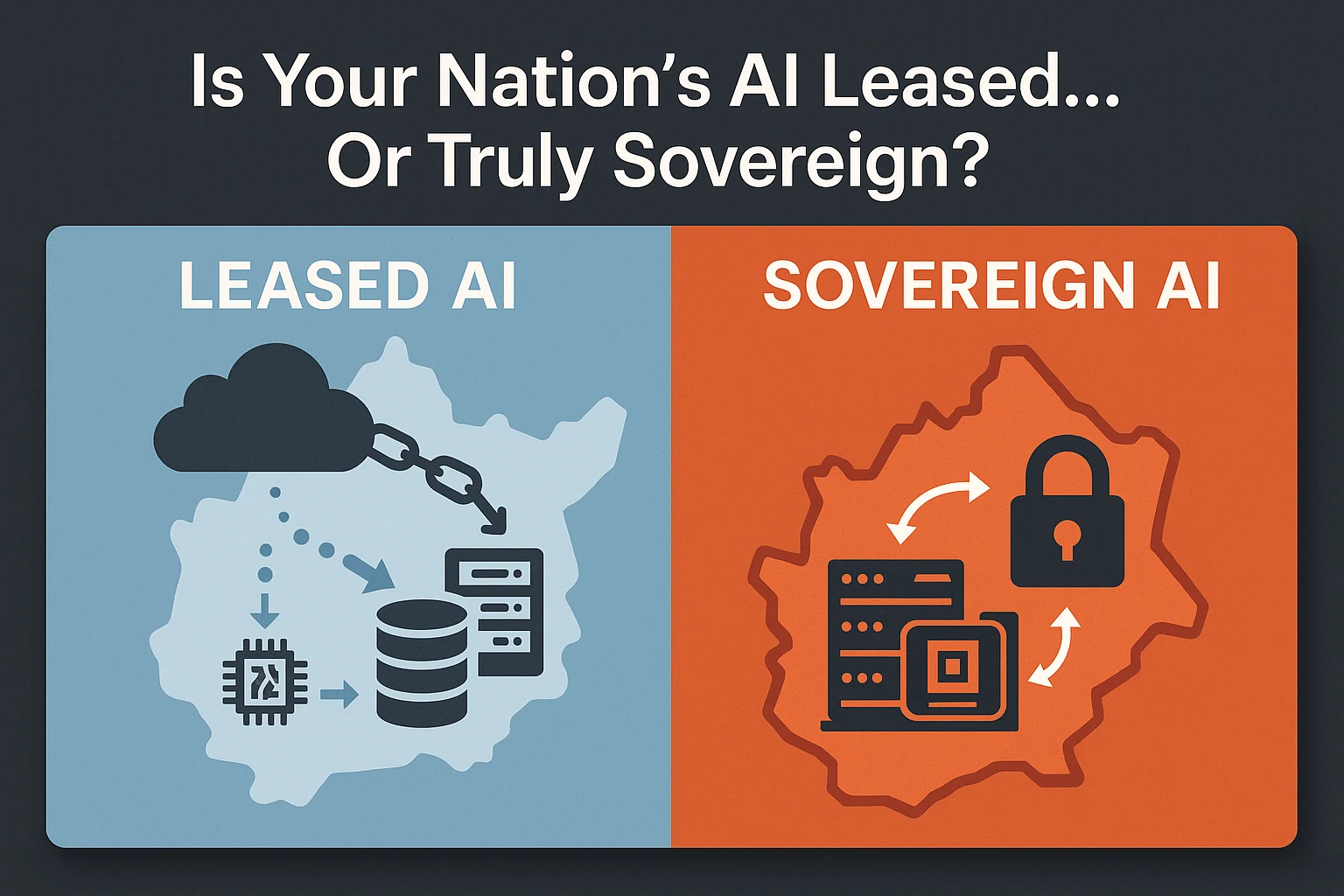

Sovereign AI goes beyond simply enacting laws and policies about artificial intelligence. It’s about a nation retaining tangible control over how AI is developed, governed, and deployed within its borders. This means owning and operating the entire AI stack—from data storage and processing to model training and inference—rather than relying on third-party cloud platforms or foreign infrastructure. Nations are increasingly recognising that without domestic compute capacity, their AI isn’t truly sovereign; it’s merely leased. This shift is driven by a desire to ensure data residency, prevent external influence, protect national security, and foster domestic innovation, as highlighted by initiatives like Europe’s AI Act and India’s Digital Public Infrastructure.

While policies, ethics, and data localisation are important components, they are insufficient on their own to achieve true Sovereign AI. The core issue is “who runs the AI stack?” If a country relies on external cloud providers for AI functions like inference, model hosting, or fine-tuning, it fundamentally lacks control. Real sovereignty requires domestic control over compute resources. This involves building dedicated “AI factories,” supercomputing initiatives, and on-premise solutions. Without local compute infrastructure, even the most robust policy frameworks are ineffective, as the actual processing and control of AI remain outside national purview.
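The question "who runs the AI stack?" can be made concrete with a guard that checks, before any request leaves an application, whether the target endpoint runs on domestically controlled infrastructure. The sketch below is illustrative and makes several assumptions. `DOMESTIC_HOSTS` is a hypothetical allowlist of on-premise hostnames. The host names, `check_endpoint`, and `ResidencyError` are invented for the example and are not part of any product, library, or standard.

```python
from urllib.parse import urlparse

# Hypothetical allowlist: hosts assumed to run on domestic, on-premise infrastructure.
DOMESTIC_HOSTS = {"inference.gov.internal", "gpu-cluster-01.gov.internal"}


class ResidencyError(Exception):
    """Raised when a request would send data to infrastructure outside national control."""


def check_endpoint(url: str) -> str:
    """Return the URL unchanged if its host is on the domestic allowlist, else raise."""
    host = urlparse(url).hostname  # None if the URL has no scheme/host
    if host not in DOMESTIC_HOSTS:
        raise ResidencyError(f"{host!r} is not a domestically operated endpoint")
    return url


if __name__ == "__main__":
    # Allowed: the (hypothetical) on-premise inference service.
    print(check_endpoint("https://inference.gov.internal/v1/generate"))
    # Blocked: a (hypothetical) foreign public-cloud endpoint.
    try:
        check_endpoint("https://api.example-cloud.com/v1/generate")
    except ResidencyError as err:
        print("blocked:", err)
```

The guard only enforces where requests go. It does not by itself provide domestic compute, so the underlying infrastructure point still applies.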

Countries worldwide are making substantial investments to develop national AI compute capacity. Examples include Canada’s Sovereign AI Compute Strategy (approximately £1.2 billion), the EU’s “InvestAI” initiative, which aims to triple AI compute capacity by 2027, and significant investments by France, Germany, Japan, India, and Singapore in advanced GPU systems and large-scale AI infrastructure.

To make Sovereign AI operational and truly independent, a nation needs control at every layer of the AI stack, from data storage and processing to model training, fine-tuning, hosting, and inference.

This layered control ensures comprehensive sovereignty over the entire AI lifecycle.
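The requirement for control at every layer can be sketched as a simple audit in which each layer of the stack records who operates it. A stack counts as sovereign only if no layer is run externally. The layer names are drawn from the functions this article mentions (data storage, processing, training, fine-tuning, model hosting, inference). The `Layer` type, the `"domestic"`/`"foreign-cloud"` labels, and the helper functions are hypothetical and used only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Layer:
    """One layer of a hypothetical AI stack and who operates it."""
    name: str
    operator: str  # illustrative labels: "domestic" or "foreign-cloud"


def external_layers(stack: list[Layer]) -> list[str]:
    """Return the names of layers that are not operated domestically."""
    return [layer.name for layer in stack if layer.operator != "domestic"]


def is_sovereign(stack: list[Layer]) -> bool:
    """A stack counts as sovereign only if no layer is operated externally."""
    return not external_layers(stack)


# Example: everything runs on domestic hardware except inference,
# which is still served from a foreign public cloud.
example_stack = [
    Layer("data storage", "domestic"),
    Layer("processing", "domestic"),
    Layer("model training", "domestic"),
    Layer("fine-tuning", "domestic"),
    Layer("model hosting", "domestic"),
    Layer("inference", "foreign-cloud"),
]

if __name__ == "__main__":
    print(external_layers(example_stack))  # ['inference']
    print(is_sovereign(example_stack))     # False
```

A single externally operated layer is enough to make `is_sovereign` return `False`. This mirrors the argument that leasing any part of the stack leaves that part outside national control.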

Moving AI workloads off public clouds is considered the crucial first step in reclaiming control for Sovereign AI. Even a standard on-premise GPU server offers significant advantages over public cloud platforms, because the hardware, the data it processes, and the models it runs stay under domestic control.

On-premise infrastructure provides the essential baseline for sovereignty, acting as the “vehicle” to the “destination” of Sovereign AI.

Sovereign AI is being implemented across various critical sectors, and these applications demonstrate the practical benefits of controlling the AI stack end-to-end for national interests.

Uvation offers end-to-end solutions designed to help nations implement Sovereign AI.

Uvation positions itself as a provider of fully managed, policy-compliant, operational AI stacks built for national scale, moving beyond just hardware to provide complete sovereignty solutions.

The core message is that Sovereign AI is fundamentally about choosing how and where a nation’s AI runs, rather than merely avoiding global AI. It emphasises that true sovereignty is built block by block and byte by byte, with infrastructure as its foundation. Any on-premise AI stack is a crucial starting point, providing a baseline of control. For future-readiness and advanced capabilities, deploying powerful hardware like the NVIDIA H200 is key. Ultimately, achieving Sovereign AI means having a fully managed, policy-compliant, and operational AI stack controlled domestically, ensuring national interests are prioritised in the age of artificial intelligence.
