Writing About AI

Uvation

Reen Singh is an engineer and a technologist with a diverse background spanning software, hardware, aerospace, defense, and cybersecurity. As CTO at Uvation, he leverages his extensive experience to lead the company’s technological innovation and development.

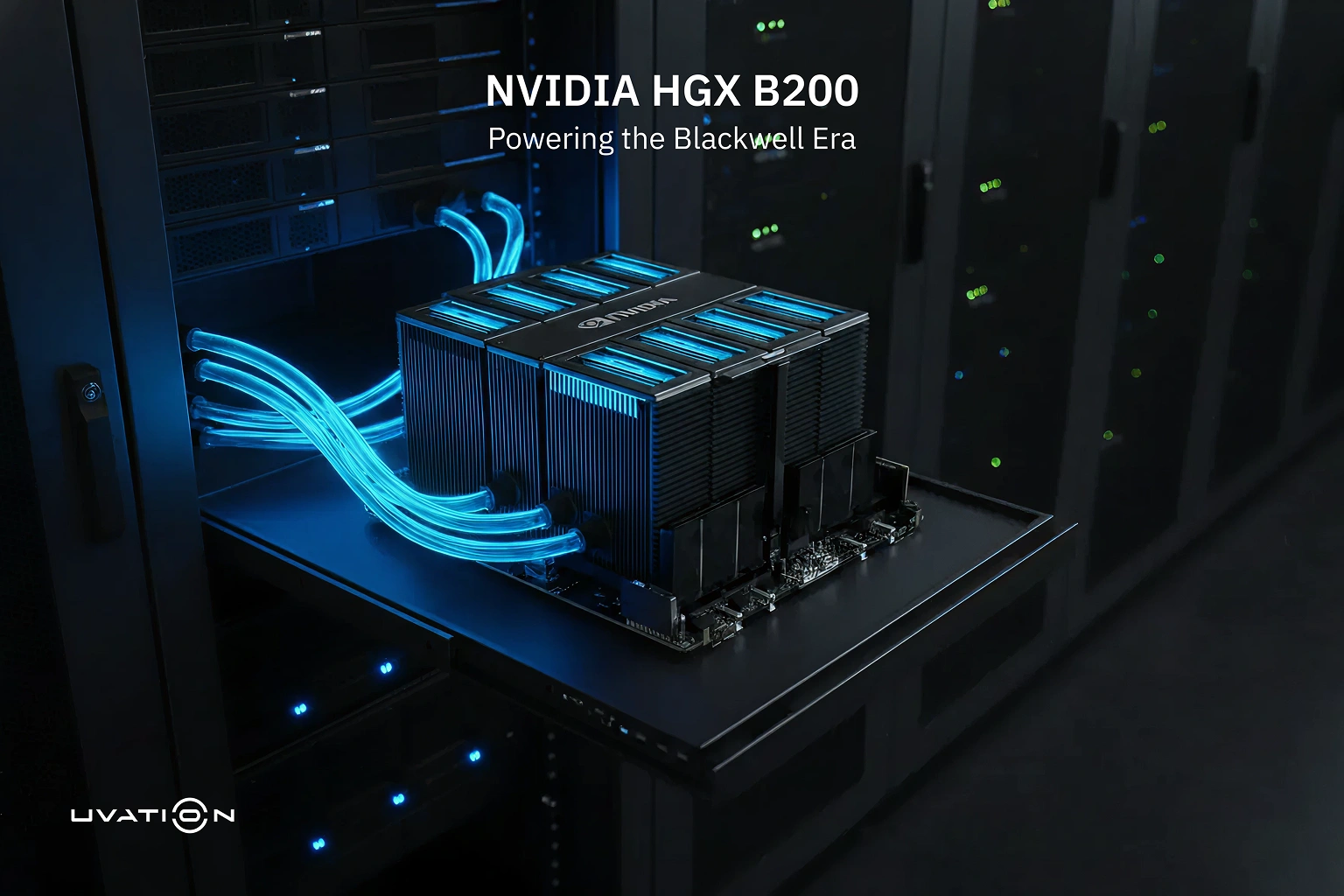

The NVIDIA HGX B200 is a high-performance eight-GPU platform built on the Blackwell architecture. Unlike a standalone accelerator, it is a unified multi-GPU baseboard that integrates GPUs, high-speed NVLink interconnects, and system-level power and cooling support into a single design used by server manufacturers. It is positioned as a top-tier solution for large-scale data centres where high compute density and efficiency are required for massive AI clusters.

This platform is engineered primarily for large-scale AI training and continuous inference, specifically for complex models like large language models (LLMs) and multimodal systems. Beyond AI, it is also highly effective for high-performance computing (HPC) tasks, such as scientific simulations and advanced data analytics, which require frequent and fast data exchange between multiple GPUs.

At the heart of the HGX B200 are updated tensor cores designed to handle mixed-precision math with high efficiency. It supports a variety of numeric formats, including FP4 and FP8 to maximise AI throughput, TF32 to accelerate deep learning without changing model code, and FP64 for high-precision scientific and engineering workloads. These Blackwell-based GPUs deliver significantly higher tensor throughput than the previous Hopper generation.
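As a rough illustration of why the lower-precision formats matter, the sketch below estimates how much memory a model's weights alone would occupy in each format named above. The 70-billion-parameter model size and the `weight_gigabytes` helper are assumptions made for illustration, not figures from NVIDIA, and the estimate ignores activations, optimiser state, caches, and any scaling metadata that low-precision formats may carry.

```python
# Storage width in bits for each numeric format mentioned in the text.
# TF32 is a tensor-core compute mode: values are still held in memory
# as 32-bit floats, so it is listed at 32 bits here.
BITS_PER_VALUE = {"FP4": 4, "FP8": 8, "TF32": 32, "FP64": 64}


def weight_gigabytes(num_params: int, fmt: str) -> float:
    """Return the decimal gigabytes needed to store num_params values in fmt."""
    bits = BITS_PER_VALUE[fmt]
    return num_params * bits / 8 / 1e9


if __name__ == "__main__":
    hypothetical_params = 70_000_000_000  # assumed model size, for illustration only
    for fmt in BITS_PER_VALUE:
        print(f"{fmt}: {weight_gigabytes(hypothetical_params, fmt):.0f} GB")
```

Under these assumptions, halving the bits per value halves the weight footprint, which is one reason FP8 and FP4 can raise throughput on memory-bound workloads.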

To prevent data bottlenecks, the HGX B200 utilises HBM3e memory attached directly to each of its eight GPUs. Each GPU features roughly 180 to 192 GB of memory, resulting in a total capacity of roughly 1.4 to 1.5 TB per baseboard. This high-bandwidth, stacked memory design shortens data paths, ensuring that the compute units remain busy even when processing the largest parameter sets.
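The baseboard total follows directly from the per-GPU figures. The sketch below works through that arithmetic for both ends of the quoted 180 to 192 GB range. The helper names are hypothetical, and `fits_in_hbm` makes the simplifying assumption that a workload can be sharded perfectly across all eight GPUs, which real deployments only approximate.

```python
GPUS_PER_BASEBOARD = 8  # from the text: eight GPUs per HGX B200


def total_hbm_gb(per_gpu_gb: int, gpus: int = GPUS_PER_BASEBOARD) -> int:
    """Aggregate HBM3e capacity across the baseboard, in decimal GB."""
    return per_gpu_gb * gpus


def fits_in_hbm(required_gb: float, per_gpu_gb: int) -> bool:
    """True if required_gb fits in the combined HBM3e (assumes ideal sharding)."""
    return required_gb <= total_hbm_gb(per_gpu_gb)


if __name__ == "__main__":
    for per_gpu in (180, 192):
        total = total_hbm_gb(per_gpu)
        print(f"{per_gpu} GB x {GPUS_PER_BASEBOARD} = {total} GB ({total / 1000:.2f} TB)")
```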

The system employs fifth-generation NVLink and NVSwitch technology to create a full all-to-all connection across the baseboard. This provides 14.4 TB/s of aggregate GPU-to-GPU bandwidth, allowing GPUs to share data directly without the delays associated with routing through system memory. This high-speed fabric is essential for distributed training, as it reduces synchronisation delays and maintains steady utilisation across the entire platform.
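To give a feel for what this bandwidth means for distributed training, the sketch below divides the quoted 14.4 TB/s across the eight GPUs and applies the textbook bandwidth-only cost model for a ring all-reduce. This is an idealised estimate under stated assumptions: the 10 GB gradient payload is hypothetical, the model ignores latency and protocol overhead, the quoted figure may count both directions of each link, and the collective algorithm actually used on an NVSwitch fabric may differ from a ring.

```python
TOTAL_NVLINK_TB_S = 14.4  # aggregate GPU-to-GPU bandwidth quoted in the text
GPUS = 8                  # GPUs on one baseboard


def per_gpu_bandwidth_tb_s(total_tb_s: float = TOTAL_NVLINK_TB_S, gpus: int = GPUS) -> float:
    """Even split of the aggregate bandwidth across the GPUs."""
    return total_tb_s / gpus


def ring_allreduce_seconds(payload_bytes: float, gpus: int, link_bytes_per_s: float) -> float:
    """Bandwidth-only estimate: each GPU transfers 2*(N-1)/N of the payload."""
    return 2 * (gpus - 1) / gpus * payload_bytes / link_bytes_per_s


if __name__ == "__main__":
    link = per_gpu_bandwidth_tb_s() * 1e12  # bytes per second per GPU
    payload = 10e9                          # assumed 10 GB of gradients
    estimate_ms = ring_allreduce_seconds(payload, GPUS, link) * 1e3
    print(f"Per-GPU bandwidth: {per_gpu_bandwidth_tb_s():.1f} TB/s")
    print(f"Idealised all-reduce time: {estimate_ms:.2f} ms")
```

The result is best read as an optimistic lower bound. Measured synchronisation times will be higher, but the scaling with payload size and link bandwidth is the point of the exercise.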

The HGX B200 can deliver up to 3x faster training and up to 15x higher inference throughput for certain AI workloads compared to earlier Hopper-based systems. Beyond raw speed, it improves rack-scale efficiency by reducing energy intensity and embodied carbon emissions. Because it packs more performance into a single platform, organisations can achieve their AI goals with fewer servers, lowering the total cost of networking, space, and power distribution.

To understand the HGX B200, imagine a professional relay team where every runner is world-class, but the real secret to their record-breaking speed is a lightning-fast, invisible baton pass (NVLink) and a massive hydration station beside each runner (HBM3e) that ensures no one ever has to slow down to catch their breath.
