Writing About AI

Uvation

Reen Singh is an engineer and a technologist with a diverse background spanning software, hardware, aerospace, defense, and cybersecurity. As CTO at Uvation, he leverages his extensive experience to lead the company’s technological innovation and development.

GPU memory has become the critical bottleneck in modern AI, particularly with the rise of large language models (LLMs) and generative AI (GenAI). Raw computational power (FLOPS) was once the main concern; the current limitation is how much data can be held in GPU memory and how quickly it can be accessed. This shift is driven by the increasing complexity of AI tasks, such as handling large context windows (e.g., 128K tokens) in LLMs. Insufficient memory capacity or bandwidth leads to “memory starvation”: latency spikes, reduced user concurrency, and a degraded user experience. Inference reliability and GenAI performance therefore depend heavily on memory capabilities.

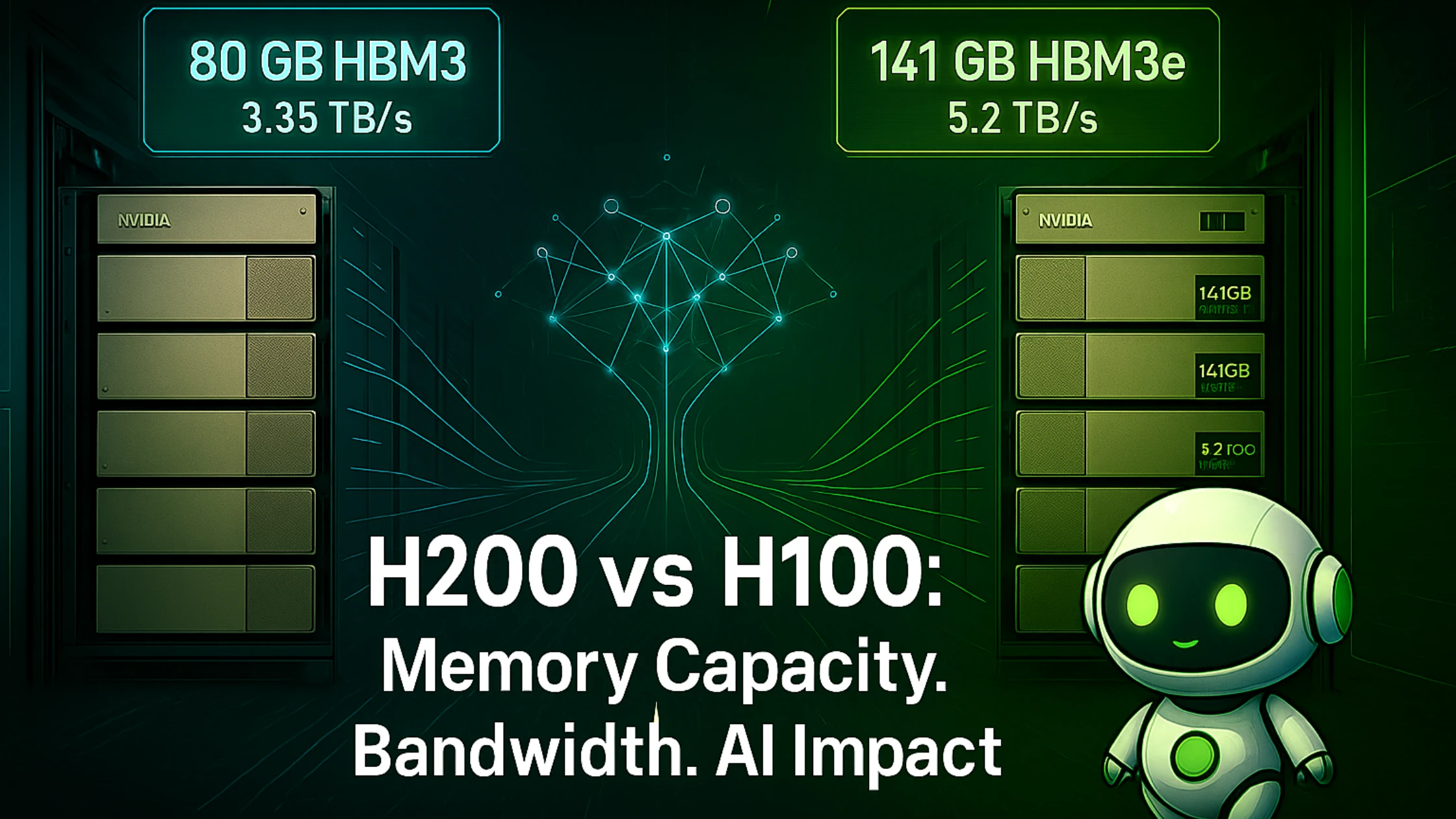

The NVIDIA H200 significantly advances GPU memory capabilities compared to the H100. The H100 features HBM3 memory with 80 GB capacity and a peak bandwidth of 3.35 TB/s, utilising a Gen 1 Transformer Engine and launched in 2022. In contrast, the H200 incorporates HBM3e memory, with a substantially larger 141 GB capacity and a higher peak bandwidth of 4.8 TB/s. It also includes a Gen 2 Transformer Engine and was launched in 2024. This means the H200 offers 76% more memory and about 1.4 times the bandwidth of the H100, providing crucial “breathing room” for demanding AI applications.

The increased memory (141 GB HBM3e) and bandwidth (4.8 TB/s) of the H200 significantly enhance LLM performance. The larger memory capacity allows much bigger models, such as 70B LLMs with embeddings, to reside entirely in a single GPU’s memory, avoiding the multi-GPU splitting or slower paging that an H100 would need. The higher bandwidth translates directly into faster token processing, because loading the KV-cache and retrieving context during attention operations are limited by memory speed. For instance, the H200 can reduce token-level latency by 32% for 64K token windows and 44% for 128K token windows compared to the H100, leading to smoother and more consistent responses, especially under heavy load.
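To see why long context windows stress both capacity and bandwidth, the size of the KV-cache can be estimated from the model's shape. The sketch below is a rough estimate, not a benchmark. It assumes the publicly documented Llama 2 70B configuration (80 layers, 8 key-value heads, head dimension 128) and 16-bit cache values; the function names are illustrative. The read-time figure is only a lower bound for streaming the cache once at a given peak bandwidth, shown here with the H100's 3.35 TB/s.

```python
def kv_cache_bytes(seq_len, n_layers=80, n_kv_heads=8, head_dim=128,
                   bytes_per_value=2, batch=1):
    """Estimate KV-cache size in bytes for a decoder-only transformer.

    Defaults are an assumption: the Llama 2 70B shape with FP16 cache values.
    """
    # Factor 2: each layer stores one key tensor and one value tensor.
    return 2 * n_layers * n_kv_heads * head_dim * bytes_per_value * seq_len * batch


def min_read_time_ms(num_bytes, bandwidth_tb_s):
    """Lower bound (ms) to stream num_bytes once at the given peak bandwidth."""
    return num_bytes / (bandwidth_tb_s * 1e12) * 1e3


for tokens in (64 * 1024, 128 * 1024):
    size = kv_cache_bytes(tokens)
    print(f"{tokens:>7} tokens: {size / 2**30:.0f} GiB of KV-cache, "
          f"at least {min_read_time_ms(size, 3.35):.1f} ms per full read at 3.35 TB/s")
```

Because the cache grows linearly with context length and must be re-read for every generated token, doubling the window doubles both the memory footprint and the minimum read time. Passing a higher bandwidth to `min_read_time_ms` shows how that floor drops on faster memory.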

For enterprise generative AI inference, the H200 offers several crucial benefits, particularly for applications like multi-tenant chatbot farms and RAG (Retrieval Augmented Generation) pipelines. Its 141 GB memory and NVLink 4.0 support enable consistent latency, higher session concurrency, and memory-persistent batching. This means the H200 can support over 160 concurrent users on models like Llama 2–13B and maintain persistent token context for multi-turn interactions. By reducing the need for cold starts and model duplication, the H200 helps lower licensing risks, improve cost controls, and simplify observability dashboards, ultimately leading to more efficient and scalable enterprise GenAI deployments.
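The link between memory capacity and session concurrency can be sketched with simple arithmetic: whatever memory is left after loading the weights is divided among per-session KV-caches. Every input below except the two GPU capacities is an assumption for illustration. The 26 GB weight figure assumes a 13B model in FP16, while the 0.5 GB per-session cache and the 5 GB reserve are hypothetical values. The results are not the article's measured concurrency numbers.

```python
import math


def max_concurrent_sessions(capacity_gb, weights_gb, kv_gb_per_session, reserve_gb=0.0):
    """Estimate how many sessions fit once weights and a safety reserve are resident."""
    free_gb = capacity_gb - weights_gb - reserve_gb
    if free_gb <= 0:
        return 0  # the model itself does not fit
    return math.floor(free_gb / kv_gb_per_session)


# Hypothetical workload: 13B model in FP16 (~26 GB), 0.5 GB of KV-cache per session.
for name, capacity_gb in (("H100", 80), ("H200", 141)):
    sessions = max_concurrent_sessions(capacity_gb, weights_gb=26,
                                       kv_gb_per_session=0.5, reserve_gb=5)
    print(f"{name}: about {sessions} resident sessions")
```

The weights are a fixed cost, so the extra capacity goes almost entirely to session state. This is why concurrency can grow faster than the raw capacity ratio.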

High-performance computing (HPC) and FP8 (8-bit floating-point) training workloads can also benefit significantly from the H200’s enhanced memory bandwidth. Applications such as CFD (Computational Fluid Dynamics) simulations, genomics pipelines, and hybrid FP8 workloads experience increased throughput. For example, in fine-tuning a GPT-3 13B model, the H200 achieved 9,400 tokens/second, a 1.5x improvement over the H100’s 6,200 tokens/second. The larger memory also aids in more efficient checkpoint management, facilitates large-batch training, and enables memory-efficient precision stacking, all of which contribute to faster and more robust training processes.
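As a quick worked example, the throughput figures quoted above can be turned into wall-clock time. The one-billion-token budget is a hypothetical value chosen only for illustration, and the calculation assumes throughput stays constant for the whole run.

```python
def hours_to_process(total_tokens, tokens_per_second):
    """Wall-clock hours to process a token budget at a steady throughput."""
    return total_tokens / tokens_per_second / 3600


budget = 1_000_000_000  # hypothetical fine-tuning token budget
h100_hours = hours_to_process(budget, 6_200)  # H100 throughput quoted above
h200_hours = hours_to_process(budget, 9_400)  # H200 throughput quoted above
speedup = 9_400 / 6_200

print(f"H100: {h100_hours:.1f} h, H200: {h200_hours:.1f} h, speedup: {speedup:.2f}x")
```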

Memory residency, or the ability of a model’s data (especially KV-cache) to fit entirely within the GPU’s memory, is a critical factor in GPU selection for AI workloads. If a model’s memory requirements exceed the GPU’s capacity, it necessitates multi-GPU splitting or slower data paging, which introduces significant latency and overhead. For example, a Llama 2–70B model requires approximately 120 GB of GPU memory, making the H200 (with 141 GB) suitable for single-GPU deployment, whereas an H100 (80 GB) would require a less efficient multi-GPU setup. Matching the GPU’s memory capacity to the workload’s memory demands is essential for optimal performance, consistency, and cost-efficiency, especially for real-time inference.
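The residency check described here reduces to a capacity comparison. The sketch below uses the article's figures (roughly 120 GB for the model, 80 GB and 141 GB per GPU); the function name is illustrative, and the calculation ignores the extra overhead that splitting a model across GPUs introduces.

```python
import math


def gpus_needed(required_gb, gpu_capacity_gb):
    """Minimum number of GPUs whose combined memory covers the requirement."""
    return math.ceil(required_gb / gpu_capacity_gb)


required_gb = 120  # approximate footprint quoted above for a 70B model
for name, capacity_gb in (("H100", 80), ("H200", 141)):
    count = gpus_needed(required_gb, capacity_gb)
    headroom_gb = count * capacity_gb - required_gb
    print(f"{name}: {count} GPU(s), {headroom_gb} GB headroom")
```

A result of 1 means the workload is fully resident on a single device. Anything higher implies cross-GPU communication on every forward pass.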

The H200 is best suited for memory-intensive AI workloads that demand high consistency, low latency, and large context windows, especially those involving multi-modal data or extensive RAG pipelines. This includes public generative AI applications requiring large token windows (e.g., 128K tokens with <100ms latency) and combined RAG and vision GenAI pipelines that need consistent performance over extra-large datasets. The H100 remains suitable for internal chatbots with smaller context windows (e.g., 64K tokens with <120ms latency) and for throughput-centric fine-tuning of 70B-class models, which can effectively leverage multi-GPU training. Essentially, if a workload is memory-bound or requires handling massive datasets and maintaining user concurrency, the H200 is the preferred choice to avoid over-provisioning and ensure optimal performance.

KV-cache residency refers to how much of the key-value cache (the attention keys and values that a transformer stores for previously processed tokens) can be held directly in the GPU’s memory. Higher residency means less need to offload data, leading to faster inference. It can be measured programmatically on an H200 using a Python script with PyTorch and the Hugging Face Transformers library. By loading a model like Llama 2–70B and performing an inference operation, you can print the maximum GPU memory allocated. For example, a typical query on an H200 for a 70B model might show around 120 GB of GPU memory used, indicating that the model’s weights and KV-cache fit within the H200’s 141 GB capacity. Note that this peak figure covers weights, KV-cache, and activations together. This direct measurement helps confirm whether a specific model workload fits efficiently on the H200.
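A minimal sketch of such a measurement script follows; it is one possible approach, not the author's exact method. The function names are illustrative. FP16 loading is an assumption that strongly affects the result, and `device_map="auto"` requires the `accelerate` package. The difference between peak memory and memory after loading approximates what inference itself (KV-cache plus activations) added on top of the weights.

```python
def residency_report(peak_bytes, capacity_gb=141.0):
    """Summarise peak usage against GPU capacity (default: the H200's 141 GB)."""
    peak_gb = peak_bytes / 1e9
    return f"peak {peak_gb:.1f} GB of {capacity_gb:.0f} GB ({peak_gb / capacity_gb:.0%})"


def measure_peak_memory(model_id, prompt, max_new_tokens=64):
    """Load a causal LM on the GPU, run one generation, return memory stats in bytes."""
    # Imported here so residency_report stays usable without a GPU stack installed.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(
        model_id,
        torch_dtype=torch.float16,  # assumption: precision changes the footprint
        device_map="auto",          # requires the `accelerate` package
    )

    after_load = torch.cuda.memory_allocated()  # roughly the model weights
    torch.cuda.reset_peak_memory_stats()

    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
    with torch.no_grad():
        model.generate(**inputs, max_new_tokens=max_new_tokens)

    peak = torch.cuda.max_memory_allocated()  # weights + KV-cache + activations
    return {"after_load": after_load, "peak": peak,
            "inference_overhead": peak - after_load}


# Example (needs a suitable GPU and access to the gated model repository):
# stats = measure_peak_memory("meta-llama/Llama-2-70b-hf", "Explain HBM3e briefly.")
# print(residency_report(stats["peak"]))
```

Running the measurement with prompts of increasing length shows how quickly `inference_overhead` grows toward the remaining capacity.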
