NVIDIA B300 Memory: The Advantage for Large AI Models

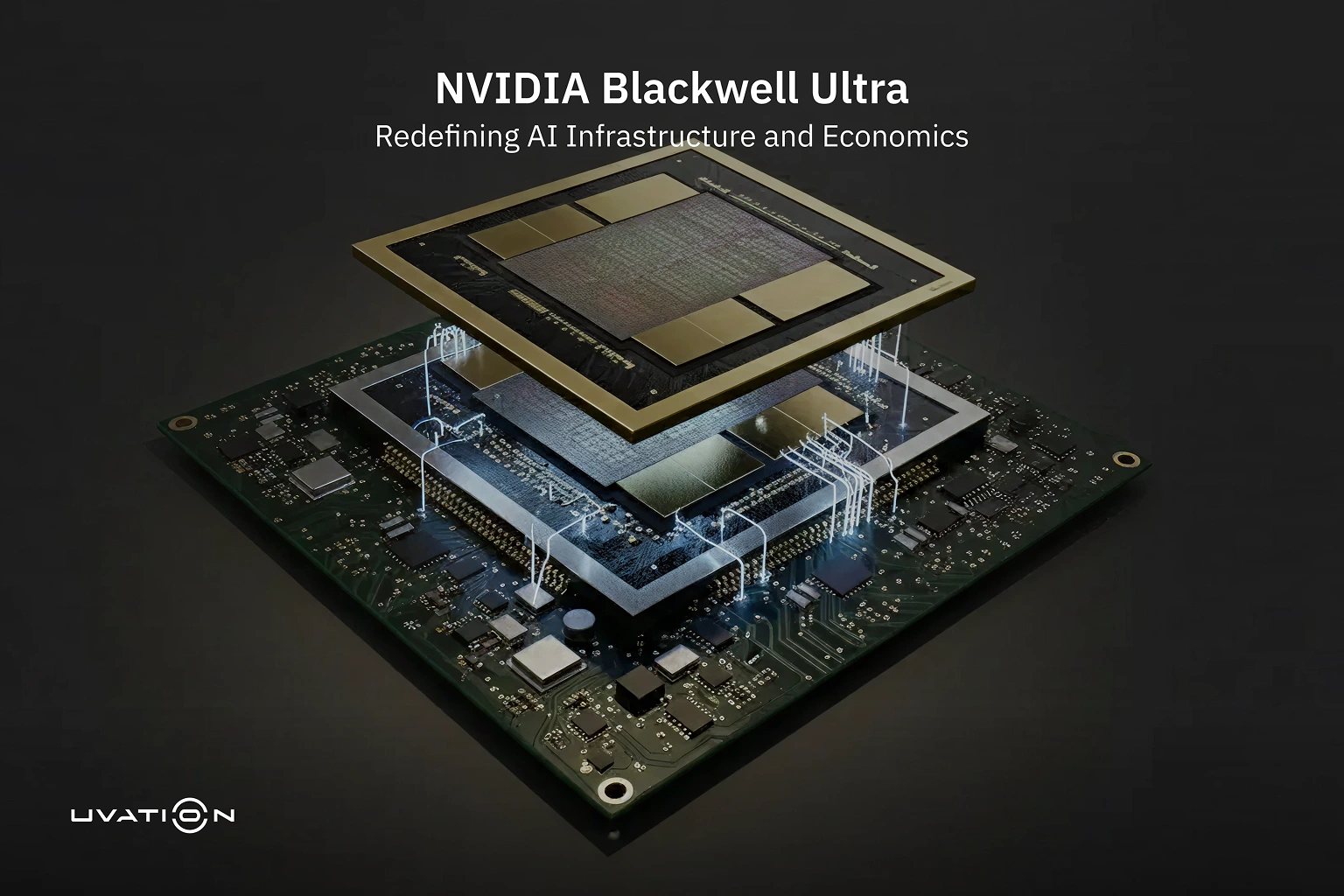

The NVIDIA B300 targets the industry-wide shift from compute-bound to memory-bound AI workloads, in which performance is limited by how quickly data reaches the GPU cores rather than by raw FLOPS. With 288 GB of HBM3e memory per GPU on an 8,192-bit memory interface, the B300 can keep large model parameters and activations in local memory instead of fetching them from slower host memory or storage. This combination of capacity and bandwidth supports data-intensive tasks such as longer context windows and Mixture-of-Experts (MoE) routing without stalling the GPU cores. The B300 also places its memory stacks on a shared package substrate with the GPU die, which shortens signal paths, lowering power consumption and improving thermal efficiency. Together, these features let enterprises scale to larger models more efficiently and reduce total compute costs by cutting the time GPUs sit idle waiting for data during training.
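
To make the capacity argument concrete, the following is a minimal back-of-the-envelope sketch, not an NVIDIA sizing tool. It assumes decimal gigabytes (1 GB = 1e9 bytes), counts weights separately from the KV cache, and ignores activations, optimizer state, and framework overhead. The helper names, the example parameter counts, and the KV-cache model shape (80 layers, 8 KV heads, head dimension 128) are hypothetical illustrations; only the 288 GB per-GPU figure comes from this article.

```python
# Back-of-the-envelope memory sizing for a single B300 GPU.
# Assumptions (illustrative only):
#   - 1 GB = 1e9 bytes (decimal, as memory capacity is usually quoted)
#   - weights and KV cache are estimated separately; activations,
#     optimizer state, and runtime overhead are ignored
#   - function names and example model shapes are hypothetical

HBM_CAPACITY_GB = 288  # per-GPU HBM3e capacity stated for the B300

# Storage size of one parameter in common inference formats.
BYTES_PER_PARAM = {
    "fp16/bf16": 2.0,
    "fp8": 1.0,
    "fp4": 0.5,
}


def weight_footprint_gb(num_params: float, dtype: str) -> float:
    """Memory needed for the model weights alone, in decimal GB."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def fits_on_one_gpu(num_params: float, dtype: str,
                    capacity_gb: float = HBM_CAPACITY_GB) -> bool:
    """True if the weights alone fit in one GPU's HBM."""
    return weight_footprint_gb(num_params, dtype) <= capacity_gb


def kv_cache_gb(num_layers: int, num_kv_heads: int, head_dim: int,
                seq_len: int, batch_size: int, bytes_per_elem: float) -> float:
    """KV-cache size for a standard transformer decoder, in decimal GB.

    The leading factor of 2 accounts for storing both keys and values
    per layer, per KV head, per token. The cache grows linearly with
    context length, which is why longer context windows need more memory.
    """
    elems = 2 * num_layers * num_kv_heads * head_dim * seq_len * batch_size
    return elems * bytes_per_elem / 1e9


if __name__ == "__main__":
    # Hypothetical model sizes, chosen only to show where the 288 GB limit falls.
    for params in (70e9, 180e9, 405e9):
        for dtype in BYTES_PER_PARAM:
            gb = weight_footprint_gb(params, dtype)
            verdict = "fits" if fits_on_one_gpu(params, dtype) else "does not fit"
            print(f"{params / 1e9:>4.0f}B params @ {dtype:<9}: {gb:6.1f} GB -> {verdict}")

    # Hypothetical model shape with a 128K-token context in 16-bit precision.
    cache = kv_cache_gb(num_layers=80, num_kv_heads=8, head_dim=128,
                        seq_len=131072, batch_size=1, bytes_per_elem=2.0)
    print(f"KV cache for one 128K-token sequence: {cache:.1f} GB")
```

Under these assumptions, a 70B-parameter model in 16-bit precision needs about 140 GB for weights and fits on one GPU, while a 405B-parameter model only fits at 4-bit precision. The KV-cache estimate shows why context length competes for the same HBM: a single 128K-token sequence for the hypothetical shape above consumes roughly 43 GB.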
