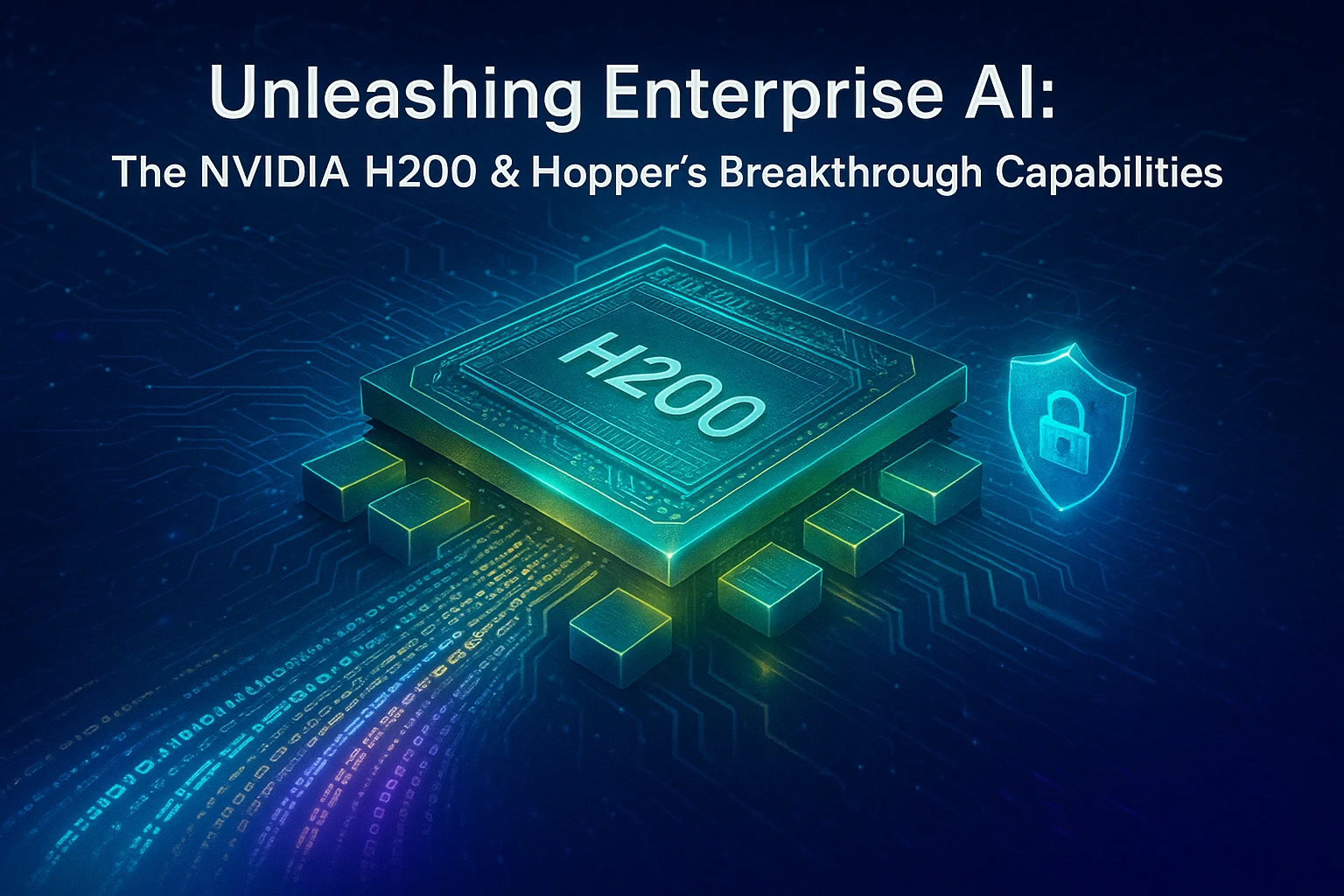

NVIDIA H200 Tensor Core GPU Technical Specifications: What It Means for AI Performance

The NVIDIA H200 Tensor Core GPU is built on the NVIDIA Hopper architecture and targets generative AI, high-performance computing (HPC), and enterprise LLM workloads. It carries 141 GB of HBM3e memory with up to 4.8 TB/s of memory bandwidth, which raises throughput for memory-bound AI workloads. The H200 includes the Transformer Engine for LLMs, Multi-Instance GPU (MIG) support for logical partitioning, and Confidential Computing for secure execution. On the compute side, it offers FP8 Tensor Core performance of 3,958 TFLOPS (with sparsity), suited to LLMs, and FP64 support for scientific computing. Together, these features support larger LLM batch sizes, real-time inference, and secure multi-tenant GPU environments.
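To make the memory figures concrete, here is a rough back-of-the-envelope sketch in Python. It uses only the 141 GB capacity and 4.8 TB/s bandwidth quoted above; the 70-billion-parameter model and the function names are hypothetical, chosen for illustration. It assumes that single-stream token generation is memory-bound, so every weight is read once per token, and it ignores KV cache, activations, and framework overhead. Treat the result as a theoretical ceiling, not a benchmark.

```python
# Figures quoted in the article (decimal units: 1 GB = 1e9 bytes).
H200_MEMORY_BYTES = 141e9          # 141 GB of HBM3e
H200_BANDWIDTH_BYTES_PER_S = 4.8e12  # up to 4.8 TB/s


def weight_bytes(num_params: float, bytes_per_param: float) -> float:
    """Memory taken by the model weights alone."""
    return num_params * bytes_per_param


def fits_in_memory(num_params: float, bytes_per_param: float,
                   memory_bytes: float = H200_MEMORY_BYTES) -> bool:
    """True if the weights alone fit on one GPU (no KV cache or activations)."""
    return weight_bytes(num_params, bytes_per_param) <= memory_bytes


def decode_tokens_per_s_upper_bound(num_params: float, bytes_per_param: float,
                                    bandwidth: float = H200_BANDWIDTH_BYTES_PER_S) -> float:
    """Bandwidth-bound ceiling for batch-size-1 decoding.

    Assumes each generated token requires reading every weight once,
    so tokens/s <= bandwidth / weight_bytes.
    """
    return bandwidth / weight_bytes(num_params, bytes_per_param)


if __name__ == "__main__":
    params = 70e9  # hypothetical 70B-parameter model (an assumption)
    for label, bytes_per_param in [("FP8", 1), ("FP16", 2)]:
        fits = fits_in_memory(params, bytes_per_param)
        bound = decode_tokens_per_s_upper_bound(params, bytes_per_param)
        print(f"{label}: weights fit = {fits}, "
              f"decode ceiling = {bound:.1f} tokens/s")
```

Under these assumptions, halving the bytes per parameter doubles the decode ceiling, which is one reason lower-precision formats such as FP8 and high memory bandwidth matter together for LLM inference.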
